Deep-learning-based precipitation observation quality control

by Yingkai Sha, D.J. Gagne, G. West, and R. Stull

In British Columbia, rain gauge networks are deployed across several key regions, where they measure rainfall and play an important role in operational flood warnings.

Although generally useful, rain gauges can be unreliable: their measurement quality can be degraded by harsh mountain weather, structural damage, and technical issues. Before rain gauge measurements can be used as “truth”, poor-quality values must be identified and removed.

Well-maintained gauge networks, such as the BC Hydro network, rely on manual quality control routines. This ensures accurate rainfall measurements but demands substantial human effort: from 2016 to 2018, human experts judged roughly 30% of hourly rain gauge measurements to be of poor quality.

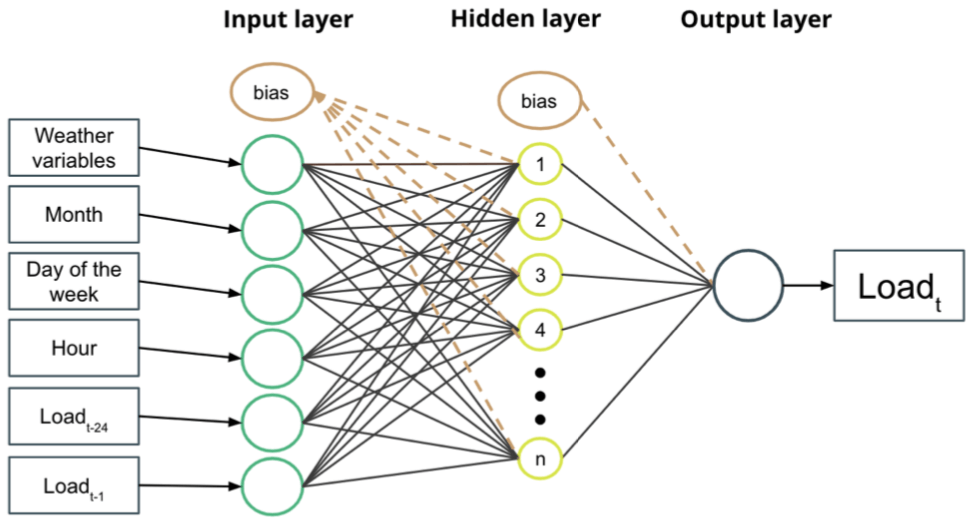

To provide accurate and timely rainfall estimates, we are collaborating with BC Hydro to develop automated quality control algorithms. The algorithm is still experimental, but we have made progress: it can currently run on a near-real-time basis, and its performance is 85% of that of a human expert.
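The text does not say how the 85% figure is computed. As a minimal sketch, assuming it is a simple agreement rate between binary quality flags (1 = poor quality, 0 = good quality) assigned by the algorithm and by a human expert, the comparison and the subsequent removal of flagged values could look like the following. The functions `agreement_with_expert` and `remove_flagged` and the toy numbers are hypothetical illustrations, not the authors' method or BC Hydro data.

```python
from typing import Sequence


def agreement_with_expert(model_flags: Sequence[int], expert_flags: Sequence[int]) -> float:
    """Fraction of observations where the automated flag matches the human flag.

    Hypothetical metric: flags are 1 for "poor quality" and 0 for "good quality".
    """
    if len(model_flags) != len(expert_flags):
        raise ValueError("flag sequences must have the same length")
    if not model_flags:
        raise ValueError("flag sequences must not be empty")
    matches = sum(m == e for m, e in zip(model_flags, expert_flags))
    return matches / len(model_flags)


def remove_flagged(values: Sequence[float], flags: Sequence[int]) -> list[float]:
    """Keep only the observations whose flag marks them as good quality (0)."""
    return [v for v, f in zip(values, flags) if f == 0]


# Toy hourly example (made-up numbers, not real gauge data).
rain_mm = [0.0, 1.2, 35.0, 0.4, -1.0]
expert = [0, 0, 1, 0, 1]
model = [0, 0, 1, 1, 1]

print(agreement_with_expert(model, expert))  # 0.8
print(remove_flagged(rain_mm, model))        # [0.0, 1.2]
```

Plain agreement is easy to read but can be dominated by the majority class; when poor-quality values make up only part of the record (roughly 30% in the BC Hydro example), per-class scores such as precision and recall on the "poor quality" flag may give a clearer picture.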